
Terraform Deployment

- Download the Terraform configuration package from the CDCgov/NEDSS-Infrastructure repository on GitHub. Review the release page to see what is included in the current release.

- Open a terminal (bash, macOS Terminal, AWS CloudShell, or PowerShell) and unzip the release file downloaded in the previous step, named nbs-infrastructure-vX.Y.Z.zip.

- Create a new directory with an easily identifiable name, e.g. nbs7-mySTLT-test, in terraform/aws/ to hold your environment-specific configuration files.

- Copy the contents of terraform/aws/samples/NBS7_standard to the new directory and change into the new directory. (Note: the samples directory contains options other than “standard”; see the README file in that directory to choose the most appropriate starting point.)

cp -pr terraform/aws/samples/NBS7_standard/* terraform/aws/nbs7-mySTLT-test cd terraform/aws/nbs7-mySTLT-testNOTE: Before editing

terraform.tfvarsandterraform.tffiles below, you may reference detailed information for each TF module under theterraform/aws/app-infrastructurein a readme file in each modules directory. Do not edit files in the individual modules. - Update the

terraform.tfvarsandterraform.tfwith your environment-specific values by following the instructions here - Review the inbound rules on the security groups attached to your database instance and ensure that the CIDR you intend to use with your NBS 7 VPC (

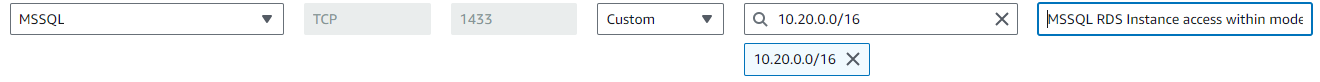

modern-cidr) is allowed to access the database.- a. For e.g if the

modern-cidris10.20.0.0/16, there should be at least one rule in a security group associated to your database that allows MSSQL inbound access from yourmodern-cidrblock
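The directory setup and the security-group check above can be sketched as a few shell functions. This is a sketch under stated assumptions, not part of the NEDSS-Infrastructure release: create_env_dir, ip_to_int, and cidr_covers are hypothetical names, the code targets a POSIX shell such as bash, and cidr_covers only compares address ranges; it does not call AWS.

```shell
# Hypothetical helpers sketching the preparation steps; these are NOT part
# of the NEDSS-Infrastructure release.

# create_env_dir PACKAGE_ROOT ENV_NAME [SAMPLE]
# Copies terraform/aws/samples/SAMPLE (default NBS7_standard) into a new
# terraform/aws/ENV_NAME directory, refusing to overwrite an existing one.
create_env_dir() {
  local root="$1" name="$2" sample="${3:-NBS7_standard}"
  local src="$root/terraform/aws/samples/$sample"
  local dest="$root/terraform/aws/$name"
  if [ ! -d "$src" ]; then
    echo "Sample directory not found: $src" >&2
    return 1
  fi
  if [ -e "$dest" ]; then
    echo "Refusing to overwrite existing directory: $dest" >&2
    return 1
  fi
  # "$src/." copies the directory contents, including any dotfiles
  mkdir -p "$dest" && cp -pr "$src/." "$dest/"
}

# ip_to_int A.B.C.D -> integer value of an IPv4 address
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  # Unquoted on purpose: split the address on dots into $1..$4
  set -- $1
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidr_covers RULE_CIDR TARGET_CIDR
# Succeeds if every address in TARGET_CIDR (e.g. your modern-cidr) lies
# inside RULE_CIDR (e.g. the source CIDR of a security-group rule).
cidr_covers() {
  local rule_ip="${1%/*}" rule_len="${1#*/}"
  local tgt_ip="${2%/*}" tgt_len="${2#*/}"
  # A rule with a longer prefix is narrower and cannot cover the target
  [ "$rule_len" -le "$tgt_len" ] || return 1
  # Network mask for the rule's prefix length (evaluates to 0 for /0)
  local mask=$(( (0xFFFFFFFF << (32 - rule_len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$rule_ip") & mask )) -eq $(( $(ip_to_int "$tgt_ip") & mask )) ]
}
```

For example, from the directory where you unzipped the package, `create_env_dir . nbs7-mySTLT-test` creates the environment directory (you still change into it as shown above), and `cidr_covers 10.0.0.0/8 10.20.0.0/16` succeeds because a 10.0.0.0/8 rule includes every address in 10.20.0.0/16. Rule CIDRs would come from your database's security groups (for example, in the EC2 console); rules whose source is another security group rather than a CIDR are outside the scope of this sketch.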


- Make sure you are authenticated to AWS. Confirm access to the intended account using the following command. (More information about authenticating to AWS can be found at the following link.)

$ aws sts get-caller-identity
{
    "UserId": "AIDBZMOZ03E7R88J3DBTZ",
    "Account": "123456789012",
    "Arn": "arn:aws:iam::123456789012:user/lincolna"
}

- Terraform stores its state in an S3 bucket. The commands below assume that you are running Terraform authenticated to the same AWS account that contains your existing NBS 6 application. Please adjust accordingly if this does not match your setup.

- a. Change directory to the account configuration directory if not already, i.e. the one containing

terraform.tfvars, andterraform.tfcd terraform/aws/nbs7-mySTLT-test - b. Initialize Terraform by running:

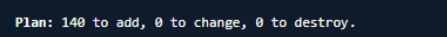

terraform init - c. Run “terraform plan” to enable it to calculate the set of changes that need to be applied:

terraform plan

- d. Review the changes carefully to make sure that they 1) match your intention, and 2) do not unintentionally disturb other configuration on which you depend. Then run “terraform apply”:

terraform apply

- e. If terraform apply generates errors, review and resolve them, and then rerun step d.
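The authentication check and the init/plan/apply cycle above can be wrapped in a small script. This is a sketch, not part of the release: require_aws_account and deploy_env are hypothetical names, it assumes the AWS CLI and Terraform are installed and on PATH, and it saves the plan to a file named tfplan (an arbitrary name) so that apply executes exactly the plan you reviewed.

```shell
# Hypothetical helpers; assumes the AWS CLI and Terraform are on PATH.

# require_aws_account EXPECTED_ACCOUNT_ID
# Fails unless the current credentials belong to the expected AWS account.
require_aws_account() {
  local expected="$1" actual
  actual=$(aws sts get-caller-identity --query Account --output text) || return 1
  if [ "$actual" != "$expected" ]; then
    echo "Authenticated to account $actual, expected $expected" >&2
    return 1
  fi
  echo "Authenticated to account $actual"
}

# deploy_env ENV_DIR
# Runs init and plan in ENV_DIR, asks for confirmation, then applies the
# saved plan. The body runs in a subshell so the cd does not leak out.
deploy_env() (
  cd "$1" || exit 1
  terraform init || exit 1
  # Save the plan so that apply executes exactly what was reviewed
  terraform plan -out=tfplan || exit 1
  printf 'Review the plan above. Apply it? [y/N] '
  read -r answer || answer=""
  case "$answer" in
    y|Y) terraform apply tfplan ;;
    *) echo "Aborted; nothing was applied."; exit 1 ;;
  esac
)
```

For example, `require_aws_account 123456789012 && deploy_env terraform/aws/nbs7-mySTLT-test`, substituting your own account ID. Applying a saved plan file does not prompt again, which is why the confirmation happens before `terraform apply tfplan`.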


- Verify that Terraform was applied as expected by examining the logs.

- Verify that the new VPC and subnets were created as expected, and confirm that the CIDR blocks you defined appear in the route tables.

- Verify that the EKS cluster was created: in the EKS console, select the cluster and inspect Resources -> Pods and Compute. At this point you should see 30+ pods and 3-5 compute nodes, per the min/max node counts defined in terraform/aws/app-infrastructure/eks-nbs/variables.tf.
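Part of this verification can also be done from the command line. The sketch below is hypothetical (verify_infra is not part of the release) and assumes the AWS CLI is configured for the account and region where you ran Terraform. It only checks that a VPC whose primary CIDR equals your modern-cidr exists and that the EKS cluster reports ACTIVE; it does not replace reviewing subnets, route tables, pods, and nodes as described above.

```shell
# Hypothetical helper; assumes the AWS CLI is configured for the account
# and region where you ran Terraform.

# verify_infra CLUSTER_NAME MODERN_CIDR
verify_infra() {
  local cluster="$1" cidr="$2" vpcs status
  # VPC IDs whose primary IPv4 CIDR block equals modern-cidr
  vpcs=$(aws ec2 describe-vpcs --filters "Name=cidr,Values=$cidr" \
    --query 'Vpcs[].VpcId' --output text) || return 1
  if [ -z "$vpcs" ]; then
    echo "No VPC found with CIDR $cidr" >&2
    return 1
  fi
  echo "VPC(s) with CIDR $cidr: $vpcs"
  # EKS reports the cluster status as ACTIVE once the control plane is ready
  status=$(aws eks describe-cluster --name "$cluster" \
    --query 'cluster.status' --output text) || return 1
  echo "EKS cluster $cluster status: $status"
  [ "$status" = "ACTIVE" ]
}
```

For example, `verify_infra cdc-nbs-sandbox 10.20.0.0/16`, using your own cluster name and modern-cidr value.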

- Now that the infrastructure has been created using Terraform, deploy Kubernetes support services in the Kubernetes cluster via the following steps:

- a. Start the Terminal/command line:

- i. Make sure you are still authenticated with AWS (see “Configuration and credential file settings” in the AWS CLI documentation).

- ii. Authenticate to the Kubernetes cluster (EKS) using the following command, substituting the name of the cluster you deployed (and your region, if it is not us-east-1):

aws eks --region us-east-1 update-kubeconfig --name <clustername> # e.g. cdc-nbs-sandbox

- iii. If the above command returns an error, check the following:

- There are no issues with the AWS CLI installation

- You have set the correct AWS environment variables

- You are using the correct cluster name (as per the EKS management console)

- b. Run the following commands to confirm that you can interact with the cluster and its Kubernetes objects.

kubectl get pods --namespace=cert-manager

The above command should return 3 pods. If it doesn’t, refresh your AWS credentials and repeat step a above.

kubectl get nodes

The above command should list the worker nodes for the cluster.
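The connection checks in steps ii and b can be scripted as follows. This is a sketch: check_cluster_access is a hypothetical name, it assumes the AWS CLI and kubectl are installed and that you are already authenticated to AWS, and it takes the region as an optional second argument that defaults to us-east-1, as in the command above. The expected count of 3 cert-manager pods comes from this guide.

```shell
# Hypothetical helper; assumes the AWS CLI and kubectl are installed and
# that you are already authenticated to AWS.

# check_cluster_access CLUSTER_NAME [REGION]
check_cluster_access() {
  local cluster="$1" region="${2:-us-east-1}" pods
  # Create or refresh the kubeconfig entry for the EKS cluster
  aws eks --region "$region" update-kubeconfig --name "$cluster" || return 1
  # Count cert-manager pods; --no-headers drops the column header line and
  # tr strips the padding some wc implementations add
  pods=$(kubectl get pods --namespace=cert-manager --no-headers | wc -l | tr -d ' ')
  if [ "$pods" -ne 3 ]; then
    echo "Expected 3 cert-manager pods, found $pods; refresh your AWS credentials and retry" >&2
    return 1
  fi
  # List the worker nodes for a visual check
  kubectl get nodes
}
```

For example, `check_cluster_access cdc-nbs-sandbox`, or `check_cluster_access <clustername> <region>` if you deployed outside us-east-1.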


- Congratulations! You have installed your core infrastructure and Kubernetes cluster! Next, we will configure your cluster using Helm charts.